Software sizings: both art and science

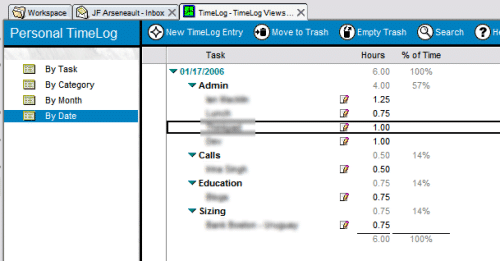

I’ve recently started performing software sizings for our clients. Given a set of metrics and business objectives, you estimate the amount of hardware required to support a given (WebSphere) workload over a certain topology. Sounds simple, but the devil’s in the details…

Let’s illustrate with an example. Customer A wants to deploy an application that’s not yet developed and would like to know how many servers/processors they will need to support it in production. There is a set of questions to be answered before we can even start:

- What topology are you considering for deployment (single tier, two tier, n-tier)?

- What edition and version of WebSphere Application Server will you be running on?

- What technology platform will you be using (Windows, Linux, iSeries, pSeries, zSeries, etc.)?

- What Pattern for e-Business best represents the type of deployment considered?

- Will authentication be required? (impact on processing requirements)

- What is the desired processor utilization rate?

- What is the anticipated transactional volume, and what is the nature of that volume?

There are many more technical questions, but this gives you a feel for what is needed before even trying to crunch numbers. But we can’t forget the non-functional requirements either, can we?

So we’ll discuss the client’s requirements when it comes to disaster recovery, redundancy, scalability, availability, maintainability, and all the other “-ilities”. This will often help determine the form factor and expandability of the underlying hardware, and whether we’ll go for horizontal (more machines) or vertical (more processors/nodes) scaling.

There are also a number of assumptions we need to lay down, given the customer’s current technology (and business) state:

- Since the application isn’t built, we should use a high contingency factor, because there are still so many unknowns (how will the application behave, how will it scale, etc.)

- We apply an average complexity factor to the application, since we don’t know yet how it’ll operate

- We can’t tell how the network is built, so we will assume the latency to be minimal

Once we have all the data we need, and understand the business context of a client, we can actually perform the sizing, using a mix of tools and reference tables from the labs, which tell us the relative performance of our products in different scenarios.
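To give a feel for how these inputs might combine, here is a rough sketch of the arithmetic. It is not the actual sizing tool or the lab tables, which aren’t reproduced here: the function name `estimate_cores`, its parameters, and every number are made up for illustration, and `tps_per_core` simply stands in for whatever relative performance figure a reference table would supply.

```python
import math


def estimate_cores(peak_tps, tps_per_core, target_utilization,
                   complexity_factor=1.0, contingency=0.0):
    """Estimate processor cores for a workload (illustrative formula only).

    peak_tps           -- anticipated peak transactions per second
    tps_per_core       -- hypothetical throughput one core sustains at 100% busy
    target_utilization -- desired processor utilization, between 0 and 1
    complexity_factor  -- multiplier for application complexity (1.0 = average)
    contingency        -- extra headroom for unknowns, e.g. 0.5 for +50%
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    if tps_per_core <= 0:
        raise ValueError("tps_per_core must be positive")

    # Inflate the raw load by the complexity and contingency assumptions.
    adjusted_load = peak_tps * complexity_factor * (1 + contingency)
    # Each core is only allowed to run at the target utilization.
    usable_tps_per_core = tps_per_core * target_utilization
    # Round up: you cannot buy a fraction of a processor.
    return math.ceil(adjusted_load / usable_tps_per_core)


# Assumed inputs: 200 tps peak, 25 tps per core, 50% target utilization,
# average complexity, 50% contingency because the application isn't built yet.
cores = estimate_cores(200, 25, 0.5, complexity_factor=1.0, contingency=0.5)
print(cores)
```

Notice how much the assumptions weigh on the result: the contingency factor and the target utilization together change the answer far more than the raw transaction volume does.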

As you can see, Software Sizing is far from an exact science. Actually, it feels more like a scientific experiment, where we decide on a number of assumptions and a hypothesis, then verify that hypothesis and come up with a range of answers, which we can interpret in a few different ways.
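One way to picture that “range of answers” is to run the same estimate across several sets of assumptions and report the spread. The sketch below does this with a deliberately simple, hypothetical formula (load scaled by complexity and contingency, divided by usable per-core throughput); the names `cores_needed` and `sizing_range` and all the values are assumptions for illustration, not output from any real sizing tool.

```python
import math
from itertools import product


def cores_needed(peak_tps, tps_per_core, utilization, complexity, contingency):
    """Hypothetical estimate: adjusted load over usable per-core throughput."""
    load = peak_tps * complexity * (1 + contingency)
    return math.ceil(load / (tps_per_core * utilization))


def sizing_range(peak_tps, tps_per_core, utilization,
                 complexities, contingencies):
    """Return (lowest, highest) core count over every assumption combination."""
    estimates = [
        cores_needed(peak_tps, tps_per_core, utilization, c, k)
        for c, k in product(complexities, contingencies)
    ]
    return min(estimates), max(estimates)


# Assumed scenario: average vs. high complexity, 25% vs. 50% contingency.
low, high = sizing_range(200, 25, 0.5,
                         complexities=(1.0, 1.5),
                         contingencies=(0.25, 0.5))
print(f"Between {low} and {high} cores, depending on the assumptions")
```

How to read the spread is where the art comes in: a cautious client may plan for the high end, while one with expandable hardware may start low and scale later.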